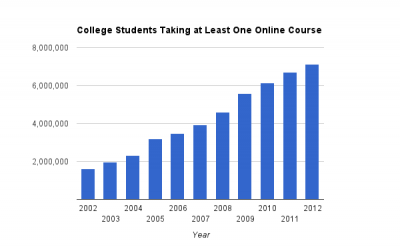

Chart created by Jill Barshay using Google Docs. Source data: Babson Research Group, “Grade Change,” January 2014

Online education has grown so fast that more than a third of all college students, more than 7 million, took at least one course online in 2012. That’s according to the 2014 edition of the annual survey by the Babson Research Group, which has been tracking online education growth since 2002. Yet nagging worries remain about whether an online education is a substandard education.

The Babson survey itself noted that even as more students take online courses, the growth rate is slowing and some university leaders are becoming more skeptical about how much students are really learning. For example, the percentage of academic leaders who say that online education is the same as or superior to face-to-face instruction dipped to 74 percent from 77 percent. That was the first reversal in a decade-long trend of rising optimism.

Opinions are one thing, but what do we actually know about online learning outcomes? Unfortunately, there have been thousands of studies, but precious few that meet standards of scientific rigor. One big problem is that not many studies have randomly assigned some students to online courses and others to traditional classes. Without random assignment, it’s quite possible that the kinds of students who choose to take online courses are different from the kinds of students who enroll in traditional courses. Perhaps, for example, students who sign up for online classes are more likely to be motivated autodidacts who would learn more in any environment. Researchers can’t conclude whether online learning is better or worse if the students in online courses differ from their peers at the outset.

On June 3, 2014, an important study, “Interactive learning online at public universities: Evidence from a six-campus randomized trial,” published earlier in the Journal of Policy Analysis and Management, won the highest rating for scientific rigor from What Works Clearinghouse, a division inside the Department of Education that seeks evidence for which teaching practices work. The study was written by a team of education researchers led by economist William G. Bowen, the former president of both Princeton University and the Andrew W. Mellon Foundation, and by Matthew M. Chingos of the Brookings Institution.

The study looked at more than 600 students who were randomly assigned to introductory statistics classes at six different university campuses. Half took an online stats class developed by Carnegie Mellon University, supplemented by one hour a week of face-to-face time with an instructor. The other half took traditional stats classes that met for three hours a week with an instructor. The authors of the study found that learning outcomes were essentially the same. About 80 percent of the online students passed the course, compared with 76 percent of the face-to-face students, a gap small enough that, allowing for statistical error, the two rates are virtually identical.
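To see why a four-point gap in pass rates can count as "virtually identical," here is a minimal sketch of a two-proportion z-test in Python. It is not the authors' method; the study used its own statistical analysis. The group size of 300 per arm is an assumption based only on the "more than 600 students, half in each group" description above, and the function name `two_proportion_z_test` is made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(p1, n1, p2, n2):
    """Two-sided z-test for a difference between two independent proportions."""
    # Pooled pass rate under the null hypothesis that both groups share one rate.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    # Standard error of the difference in proportions under that null.
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# ASSUMPTION: about 300 students per group (the article says only "more than 600", split in half).
N_PER_GROUP = 300

# Pass rates reported in the article: 80 percent hybrid, 76 percent face-to-face.
z, p_value = two_proportion_z_test(0.80, N_PER_GROUP, 0.76, N_PER_GROUP)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

Under these assumed group sizes, the p-value comes out well above the conventional 0.05 threshold, so a gap of this size could plausibly arise by chance alone.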

The university campuses that participated in the fall 2011 trial were the University at Albany (SUNY), SUNY Institute of Technology, University of Maryland Baltimore County, Towson University (University System of Maryland), Baruch College (CUNY) and City College (CUNY).

It’s worth emphasizing that this was not a pure online class, but a hybrid one. One hour a week of face-to-face time with an instructor amounts to a third of the face-to-face time in the control group. Also, the Carnegie Mellon course not only required students to crunch data themselves using a statistical software package, but it also embedded interactive assessments into each instructional activity. The software gives feedback to both the student and the instructor to let them know how well the student understood each concept. However, the Carnegie Mellon software didn’t tailor instruction for each student. For example, if students answered questions incorrectly, the software didn’t direct them to extra practice problems or reading.

“We believe it is an early representative of what will likely be a wave of even more sophisticated systems in the not-distant future,” the authors wrote.

The Department of Education reviewed only the analysis of passing rates in the Carnegie Mellon stats class trial. But Bowen and his co-authors went further, and found that final exam scores and performance on a standardized assessment of statistical literacy were also similar between the students who took the class in person and those who took it online. They also conducted several speculative cost simulations and found that hybrid models of instruction could, in the long run, substantially reduce instructor costs in large introductory courses.

But in the end, the authors offer this sobering conclusion:

“In the case of a topic as active as online learning, where millions of dollars are being invested by a wide variety of entities, we might expect inflated claims of spectacular successes. The findings in this study warn against too much hype. To the best of our knowledge, there is no compelling evidence that online learning systems available today (not even highly interactive systems, of which there are very few) can in fact deliver improved educational outcomes across the board, at scale, on campuses other than the one where the system was born, and on a sustainable basis. This is not to deny, however, that these systems have great potential. Vigorous efforts should be made to continue to explore and assess uses of both the relatively simple systems that are proliferating, often to good effect, and more sophisticated systems that are still in their infancy. There is every reason to expect these systems to improve over time, and thus it is not unreasonable to speculate that learning outcomes will also improve.”